Machine Learning Projects

An interest of mine is learning about how machine learning works and how it can be best applied. I am currently taking a senior-level university course in machine learning along with some online courses for tools like TensorFlow.

Here I will keep track of my projects, major learnings, and accomplishments.

Updates and Progress

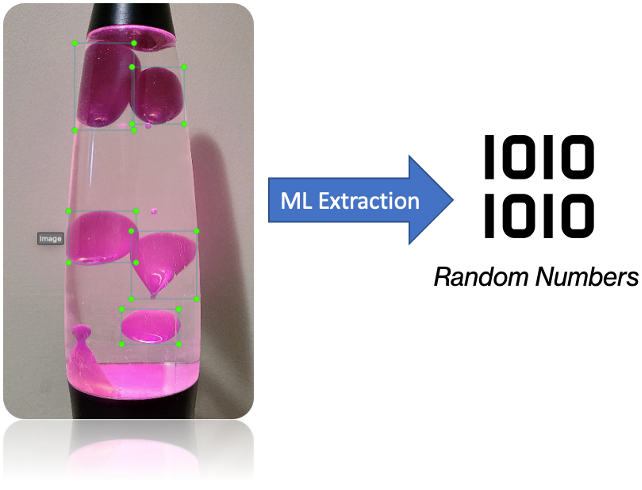

Below is a paper for a project I did involving random number generation and deep learning.

I have finished my first class in machine learning and wanted to share some of the major topics I learnt about and the projects I completed.

- 1. Associative Memory: Used a linear associator as the network architecture, with Hebb's rule to derive a formula for the weight matrix. Perfect recall requires orthogonal inputs. Also applied the pseudo-inverse rule to avoid calculating the inverse of large matrices.

- 2. Steepest Descent Algorithm: Used the steepest descent algorithm to develop a more general approach to machine learning. Learnt how performance measures such as the mean square error can be used to evaluate a network. Calculated eigenvalues to find the maximum stable learning rate for the steepest descent algorithm.

- 3. Least Mean Square Algorithm: Learnt the Widrow-Hoff learning method and used the least mean square (LMS) algorithm to train neurons. Used delays with ADALINE networks to implement adaptive filtering.

- 4. Backpropagation Algorithms: Learnt how the sensitivity of the network error is backpropagated through the layers to update the neuron weights. Used backpropagation networks to approximate sinusoidal functions.

- 5. Fuzzy Logic: Studied fuzzy logic and its applications. Learnt about different membership functions and logical operations such as the Cartesian product, max-min composition, max-product composition, and fuzzy if-then rules. Used different defuzzification methods such as centroid, weighted average, smallest of max, largest of max, and mean of max.
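As a rough illustration of the associative memory topic above, the sketch below builds a Hebb-rule weight matrix for a linear associator in plain Python. The helper names (`hebb_weights`, `mat_vec`) and the example patterns are illustrative assumptions rather than the original coursework code. The inputs are chosen to be orthonormal (orthogonal and unit length) so that recall is exact.

```python
def outer(u, v):
    """Outer product u v^T as a list of rows."""
    return [[ui * vj for vj in v] for ui in u]


def mat_add(a, b):
    """Element-wise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def mat_vec(w, p):
    """Matrix-vector product W p."""
    return [sum(wij * pj for wij, pj in zip(row, p)) for row in w]


def hebb_weights(inputs, targets):
    """Hebb rule for a linear associator: W = sum over q of t_q p_q^T."""
    rows, cols = len(targets[0]), len(inputs[0])
    w = [[0.0] * cols for _ in range(rows)]
    for p, t in zip(inputs, targets):
        w = mat_add(w, outer(t, p))
    return w


# Hypothetical prototype patterns: the two inputs are orthonormal.
inputs = [[0.5, -0.5, 0.5, -0.5], [0.5, 0.5, -0.5, -0.5]]
targets = [[1.0, -1.0], [1.0, 1.0]]

W = hebb_weights(inputs, targets)
recalled = [mat_vec(W, p) for p in inputs]
print(recalled)  # each recalled vector matches its target
```

If the inputs were not orthogonal, the cross terms between patterns would no longer cancel and the recalled outputs would drift away from the targets, which is the case the pseudo-inverse rule is meant to handle.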

I also completed several projects where I got to apply these learning techniques:

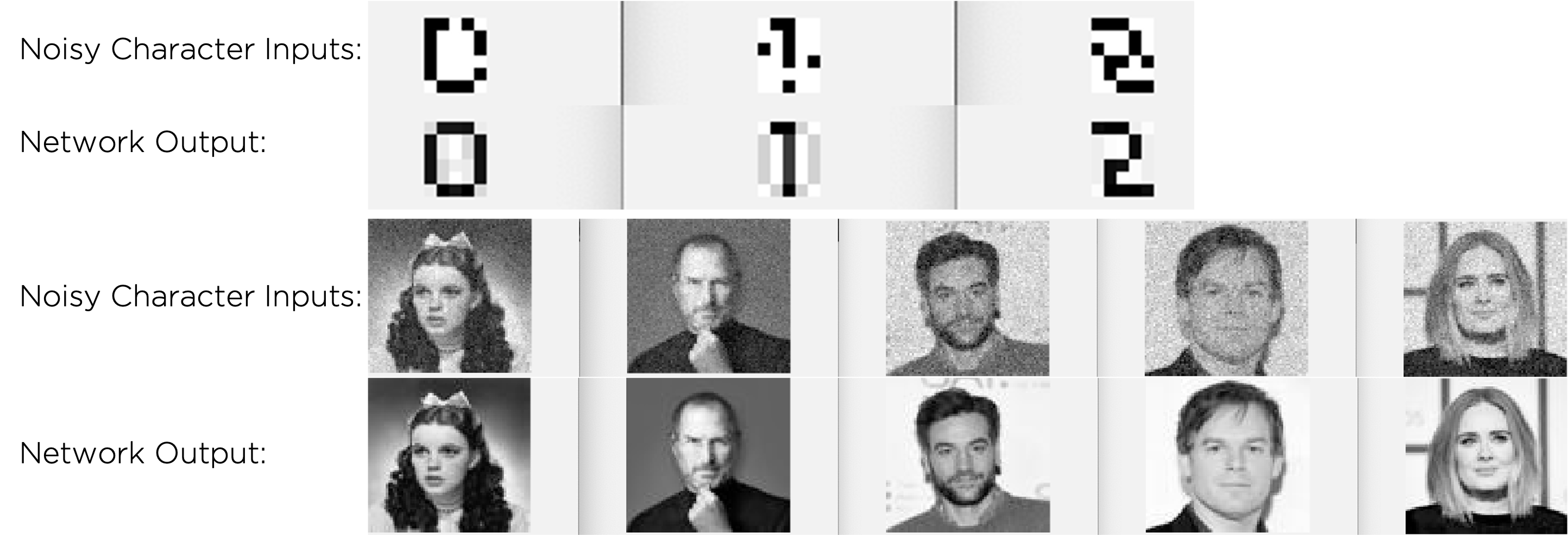

- 1. Hebbian/Pseudo-Inverse Recognition: Used the Hebbian and pseudo-inverse rules to train a neural network that could perform basic digit recognition. Applied the same rules to recognize images from noisy inputs.

- 2. LMS Character Recognition: Used the LMS learning algorithm to classify images of noisy letters.

- 3. Least Mean Square Algorithm: Learnt the Widrow-Hoff learning method and used the least mean square (LMS) algorithm to train neurons. Used delays with ADALINE networks to implement adaptive filtering.

- 4. Backpropagation Algorithms: Designed a backpropagation network to predict time-series stock market data.

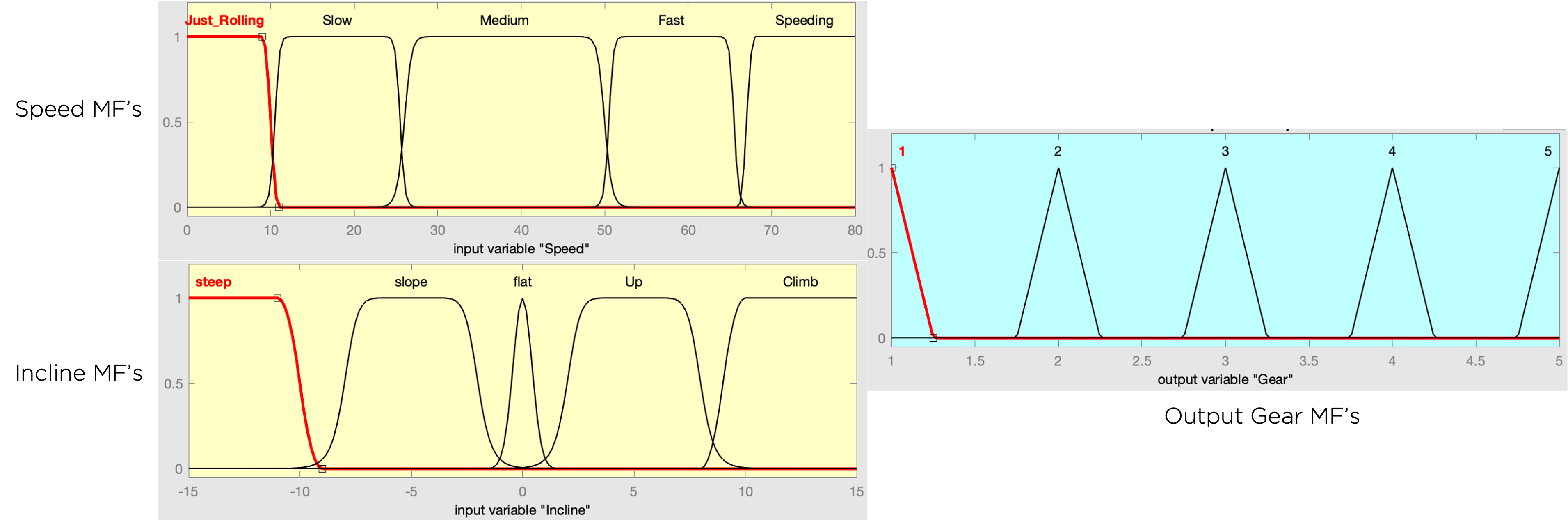

- 5. Fuzzy Inference System: Designed a fuzzy inference system to select 1 of 5 gears for a car depending on the speed and incline of the car. Selected membership functions and implemented the system rules using the MATLAB Fuzzy Logic Designer.

- 6. CNN Weather Classification System: Used TensorFlow with image preprocessing techniques to design a convolutional neural network. Trained it on images and applied it to weather classification.
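The Widrow-Hoff (LMS) update behind the LMS projects above can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the function name `lms_train`, the learning rate, and the toy data are hypothetical, and the update follows the common textbook form w ← w + 2αe·p, where e is the error between the target and the linear output.

```python
def lms_train(samples, targets, lr=0.1, epochs=200):
    """Train a single linear neuron (ADALINE-style) with the LMS rule."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for p, t in zip(samples, targets):
            # Linear output a = w . p + b (no hard limit during training).
            a = sum(wi * pi for wi, pi in zip(w, p)) + b
            e = t - a
            # Move the weights and bias along the negative error gradient.
            w = [wi + 2 * lr * e * pi for wi, pi in zip(w, p)]
            b += 2 * lr * e
    return w, b


# Toy data (assumed): targets generated by t = 2*x1 - x2 + 0.5.
samples = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
targets = [0.5, 2.5, -0.5, 1.5]

w, b = lms_train(samples, targets)
print(w, b)  # approaches weights [2, -1] and bias 0.5
```

The learning rate has to stay small relative to the size of the inputs; if it is too large, the updates overshoot and the weights diverge instead of settling.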

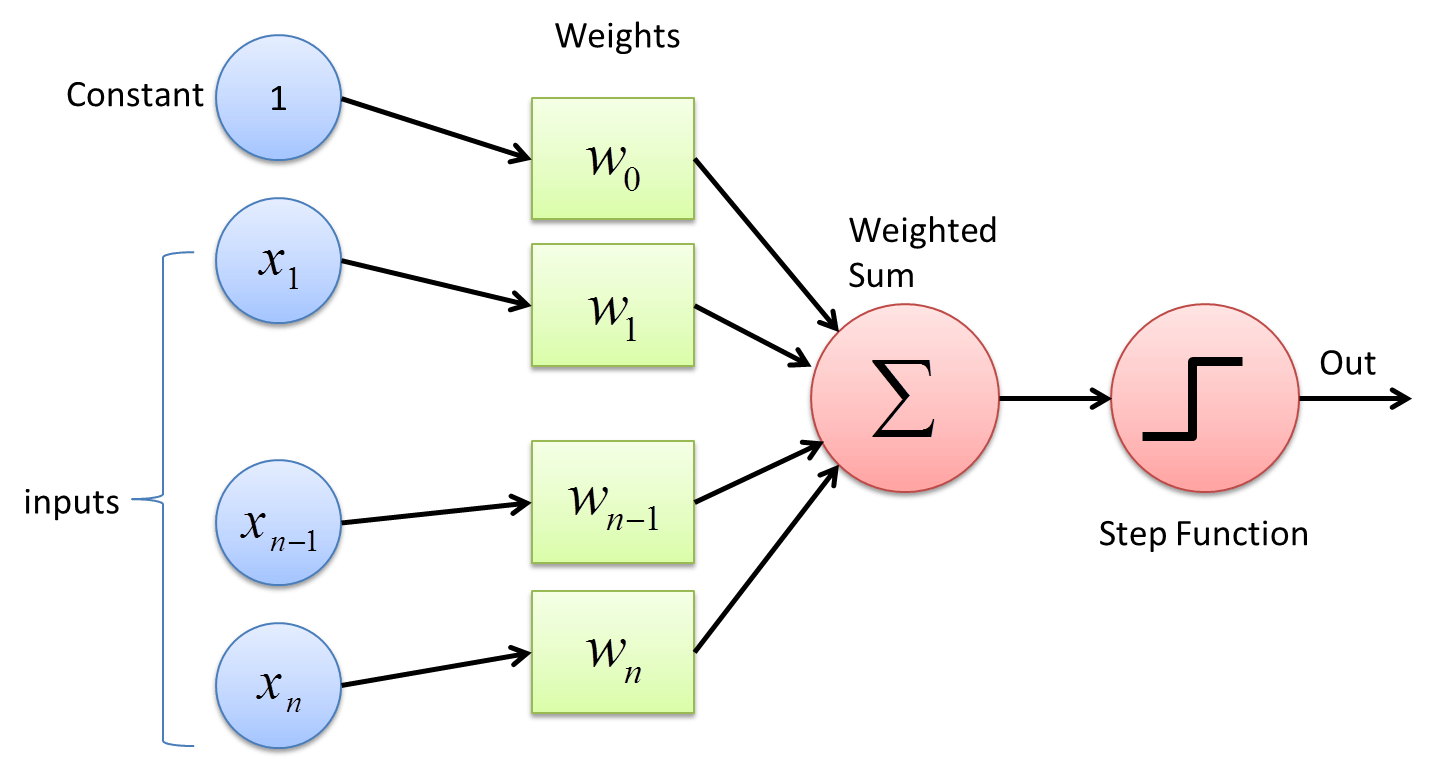

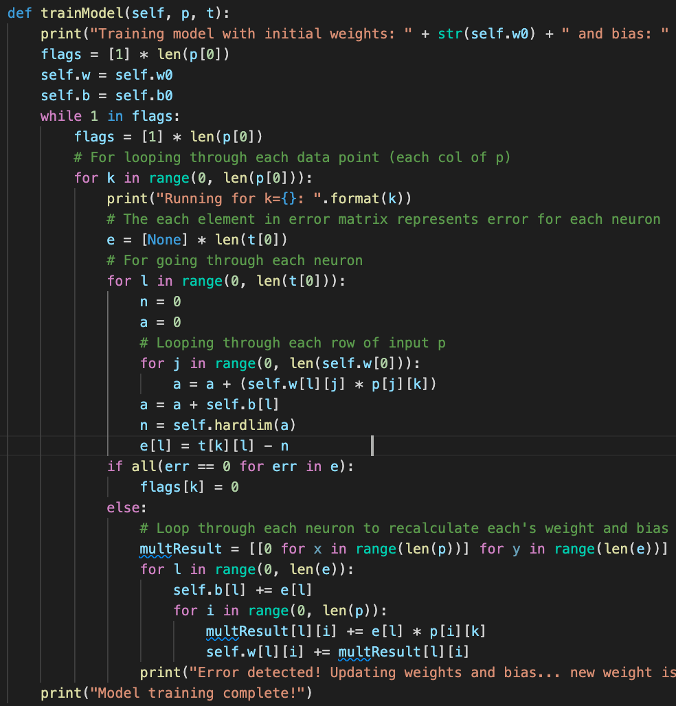

I wanted to challenge myself to write a perceptron class that could be trained with n labels and m features without using any packages like NumPy. Preliminary testing has been successful; however, the time complexity of the model training function likely leaves room for improvement.

Completing this mini-project has given me a fundamental understanding of how perceptrons function, and I look forward to applying this baseline knowledge to future problems.
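One possible shape for such a class is sketched below. It is not the implementation described above. It assumes one weight vector per label and the standard multiclass perceptron update (raise the true label's weights, lower the wrongly predicted label's weights). The class name `MultiClassPerceptron` and the toy data are hypothetical.

```python
class MultiClassPerceptron:
    """Multiclass perceptron in plain Python (no NumPy)."""

    def __init__(self, n_features, n_labels):
        # One weight row per label; the last entry of each row is the bias.
        self.weights = [[0.0] * (n_features + 1) for _ in range(n_labels)]

    def _scores(self, x):
        xb = list(x) + [1.0]  # append a constant 1 for the bias term
        return [sum(w * v for w, v in zip(row, xb)) for row in self.weights]

    def predict(self, x):
        scores = self._scores(x)
        # Index of the highest score; ties go to the lowest label index.
        return max(range(len(scores)), key=scores.__getitem__)

    def fit(self, samples, labels, epochs=1000):
        for _ in range(epochs):
            mistakes = 0
            for x, y in zip(samples, labels):
                guess = self.predict(x)
                if guess != y:
                    xb = list(x) + [1.0]
                    for j, v in enumerate(xb):
                        self.weights[y][j] += v      # pull true label closer
                        self.weights[guess][j] -= v  # push wrong label away
                    mistakes += 1
            if mistakes == 0:  # every sample classified correctly
                break
        return self


# Toy data (assumed): three linearly separable clusters in 2D.
samples = [[0, 0], [1, 0], [0, 1],
           [5, 0], [6, 1], [5, 1],
           [0, 5], [1, 6], [1, 5]]
labels = [0, 0, 0, 1, 1, 1, 2, 2, 2]

model = MultiClassPerceptron(n_features=2, n_labels=3).fit(samples, labels)
predictions = [model.predict(x) for x in samples]
print(predictions == labels)
```

In this sketch each pass over the data costs roughly O(samples × labels × features), because every prediction scores all labels. That is one place where a training function like this spends most of its time.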

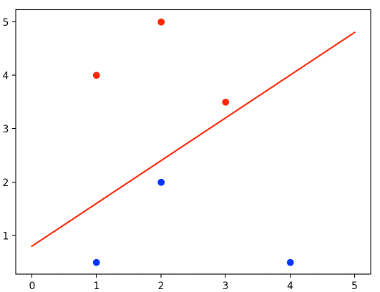

After learning about perceptrons in class, I tried my hand at coding a class for a simple single-neuron perceptron. Below is the graph demonstrating the classification decision the algorithm computes. While this is a very simple example, I next want to try writing a generalized perceptron that can take n features and classify them into m labels.